
remove obsolete images and scripts; add new artificialbeacons image for updated content


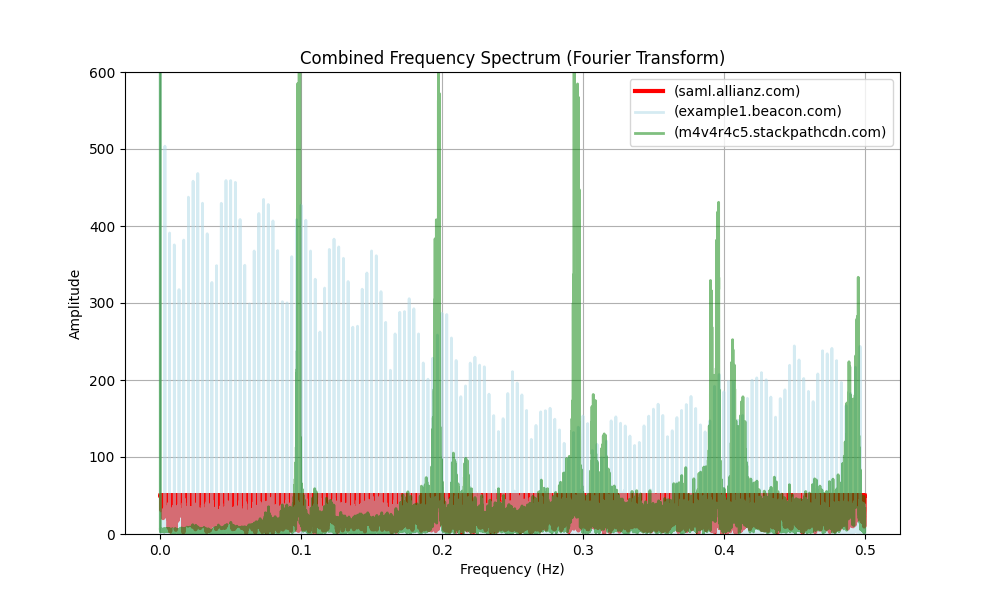

- Codes/FTT_autocorrelation/FFT3.png (0 additions, 0 deletions)

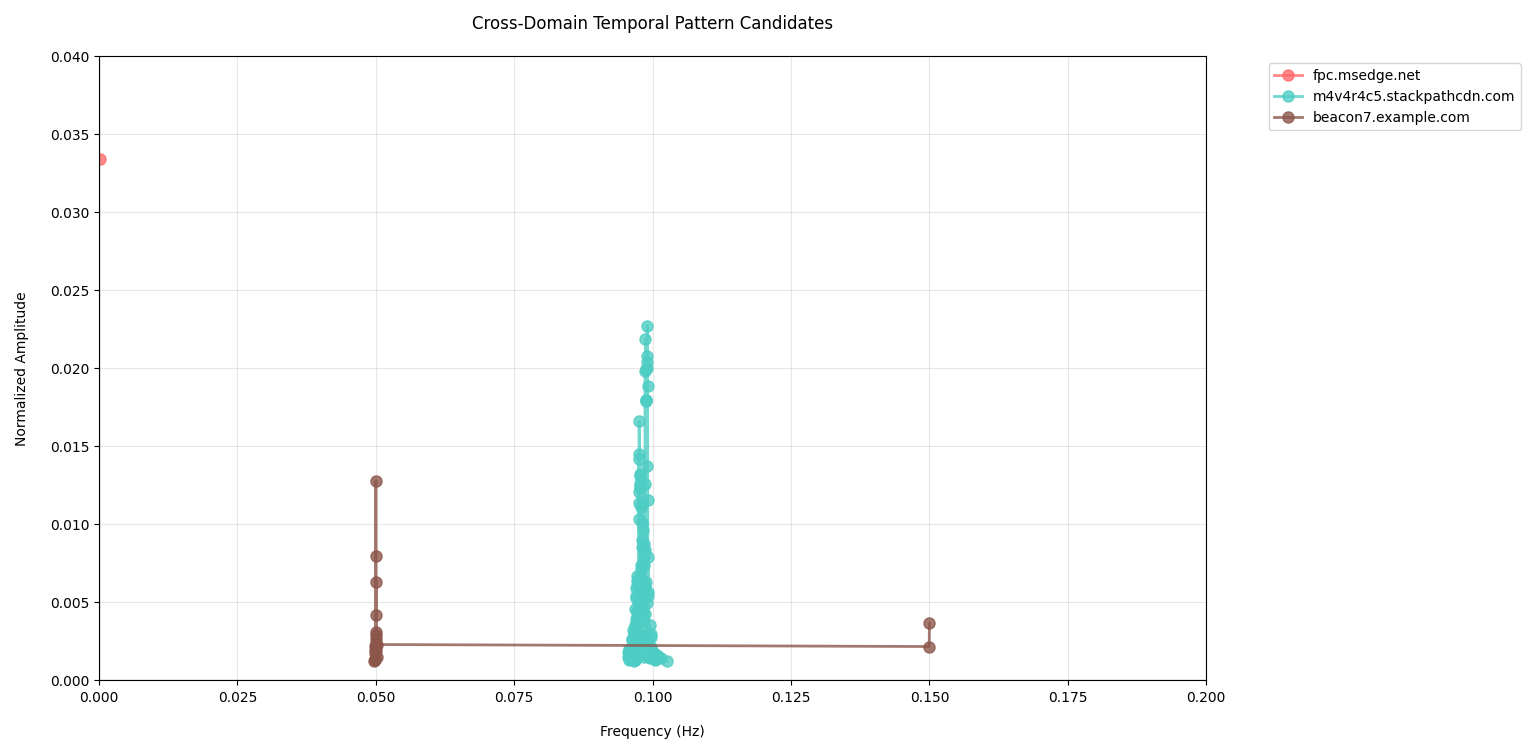

- Codes/FTT_autocorrelation/FFT_AutoCorrelation.py (208 additions, 67 deletions)

- Codes/FTT_autocorrelation/FFT_AutoCorrelation_Zoom.py (0 additions, 84 deletions)

- Codes/FTT_autocorrelation/alltop.png (0 additions, 0 deletions)

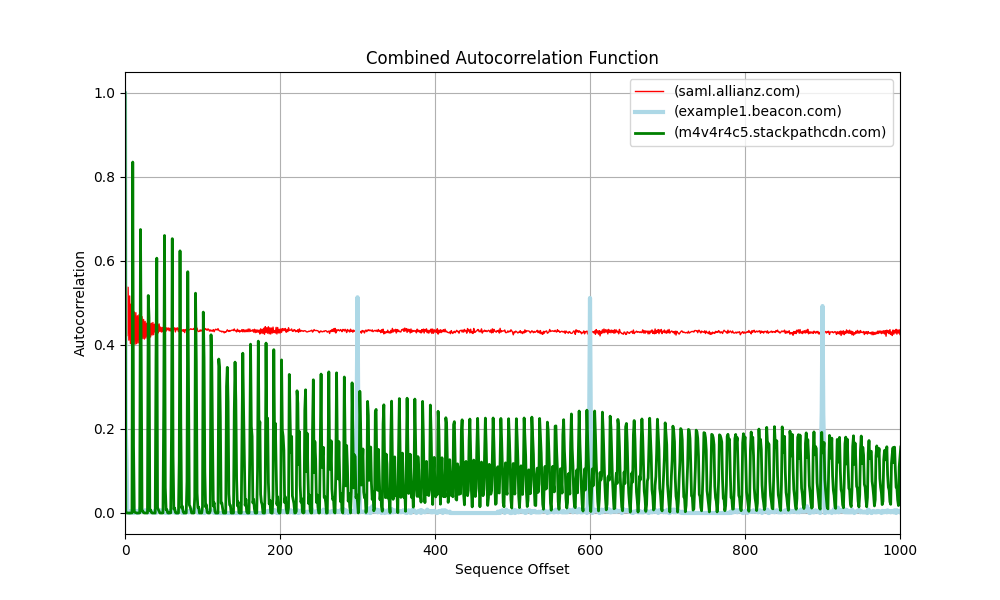

- Codes/FTT_autocorrelation/artificialbeacons.png (0 additions, 0 deletions)

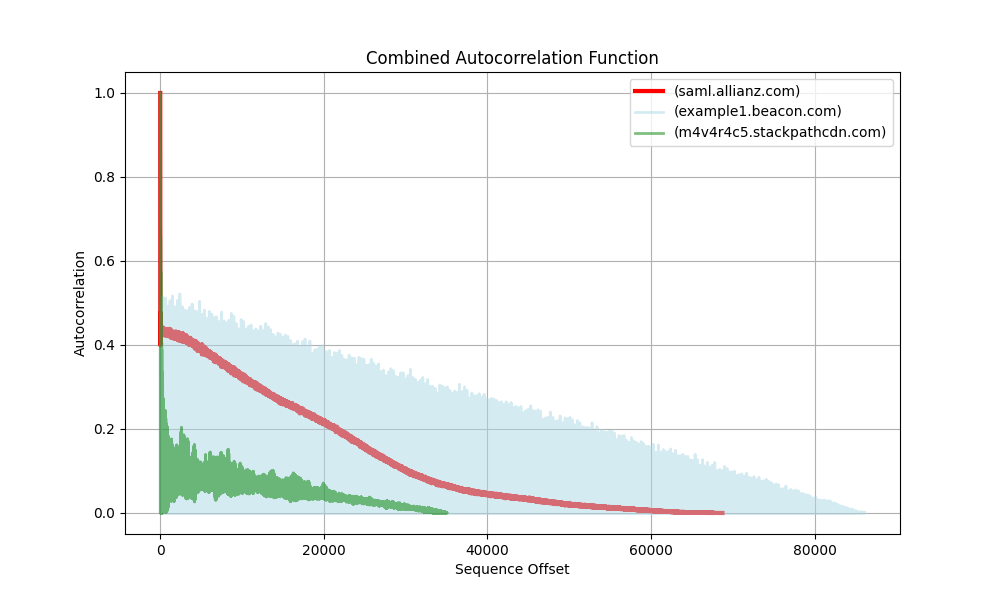

- Codes/FTT_autocorrelation/auto3.png (0 additions, 0 deletions)

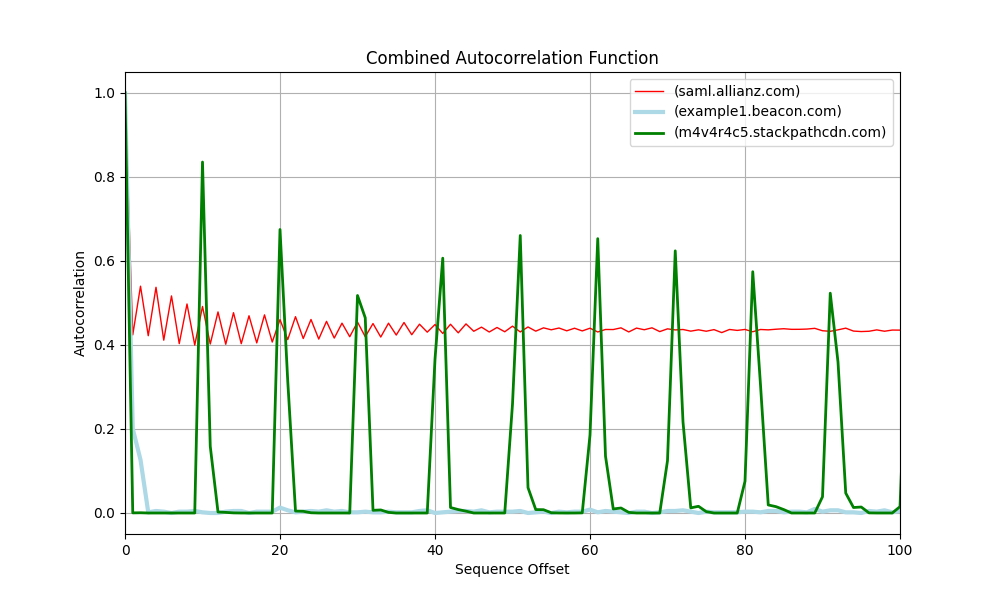

- Codes/FTT_autocorrelation/auto3_100.png (0 additions, 0 deletions)

- Codes/FTT_autocorrelation/auto3_1000.png (0 additions, 0 deletions)

Codes/FTT_autocorrelation/FFT3.png: deleted, mode 100644 → 0, 64.8 KiB

Codes/FTT_autocorrelation/alltop.png: mode 0 → 100644, 57.2 KiB

104 KiB

Codes/FTT_autocorrelation/auto3.png: deleted, mode 100644 → 0, 46.5 KiB

73.4 KiB

92.2 KiB